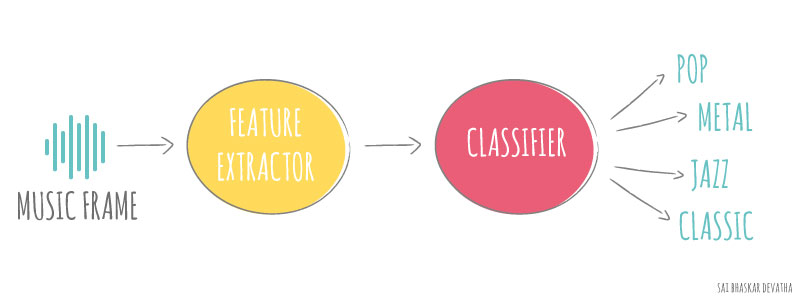

Through this project, we aim to address this problem using something very close to people: music. We explore various methodologies for developing an automatic music genre classifier and thereby help compare the efficiency of these methods.

Apart from classification itself, such a classifier can be used to better understand audio properties and the human perception of music. Its applications can also be extended to systems such as music genre-based disco lights and emotion-mapped music.

The choice of frame size is critical to the performance of the classifier because a music file varies greatly over time: the audio might lean toward one genre at the start and toward another at the end, yet it is the overall average that matters most.

Similarly, extracting features that capture subtle differences between genres is very important for improving the accuracy of the classifier.

George Tzanetakis, Georg Essl and Perry Cook pioneered work in this field and published their work on a 9-dimensional feature vector: mean-Centroid, mean-Rolloff, mean-Flux, mean-Zero Crossings, std-Centroid, std-Rolloff, std-Flux, std-Zero Crossings and Low Energy. They obtained accuracies of 16% and 62% with random and Gaussian classifiers, respectively.[1] Hariharan Subramanian used MFCC, rhythmic features and MPEG-7 features in addition to the above features.[3] In Music Genre Classification, Michael Haggblade, Yang Hong and Kenny Kao used MFCC and obtained an accuracy of around 87% with a DAG SVM.[4] In Music type classification by Spectral Contrast feature, an accuracy of 82.3% was observed.[2]

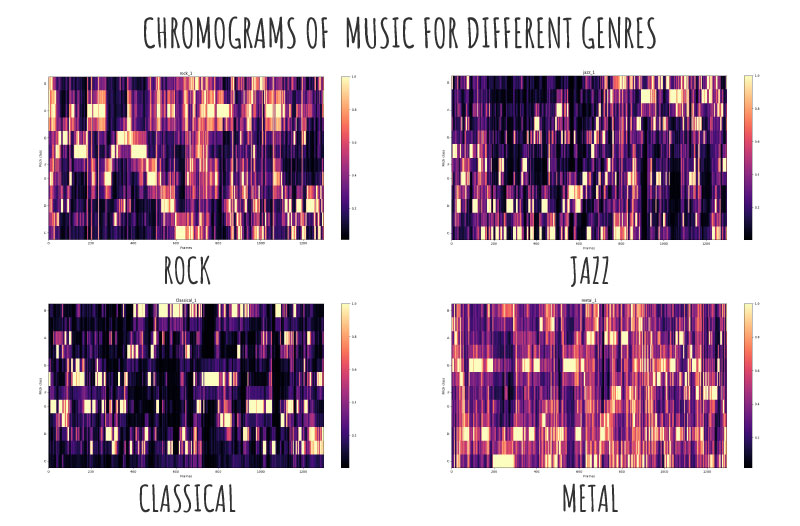

Juan Pablo Bello describes in his paper that most music is based on the tonality system, i.e., sounds arranged according to pitch relationships into interdependent spatial and temporal structures. He also shows how chroma can be used to analyze these relationships and thereby understand the human perception of music. This inspired us to experiment with the chromagram in our automatic music genre classification project.[8]

Humans are remarkably good at genre classification: they can identify the genre of a piece of music from as little as 250 milliseconds of audio. This suggests that a genre classification methodology should stay as close as possible to the human perception of music rather than to any higher-level theoretical description. We therefore use chroma-based features, as they are closely correlated with the harmonic and melodic aspects of music while being robust to changes in timbre and instrumentation. Chromagrams are also widely used to analyze human perception of music and map it onto signal processing techniques. Further, we compared the performance of these chroma-based audio features, or pitch class profiles, with that of other features such as MFCC, zero crossings and rhythm-based features, to establish the classification efficiency associated with each.

1. Title and Authors.[Top]

2. Abstract.[0]

3. Introduction to Problem.[1]

4. Proposed Approach.[2]

5. Experimental Results, Project Code and Discussions.[3]

6. Conclusions and Summary.[4]

7. References.[5]

We follow the above order to ensure that every finding is properly documented.

2.1 Data Preprocessing

2.2 Feature Extraction and Selection

2.3 Classification

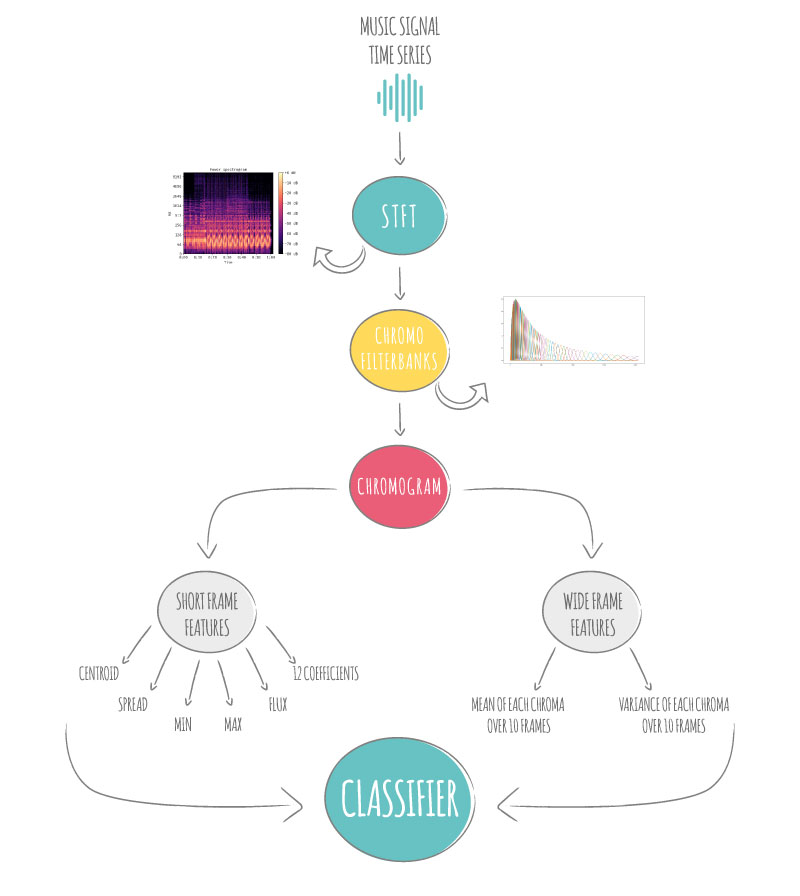

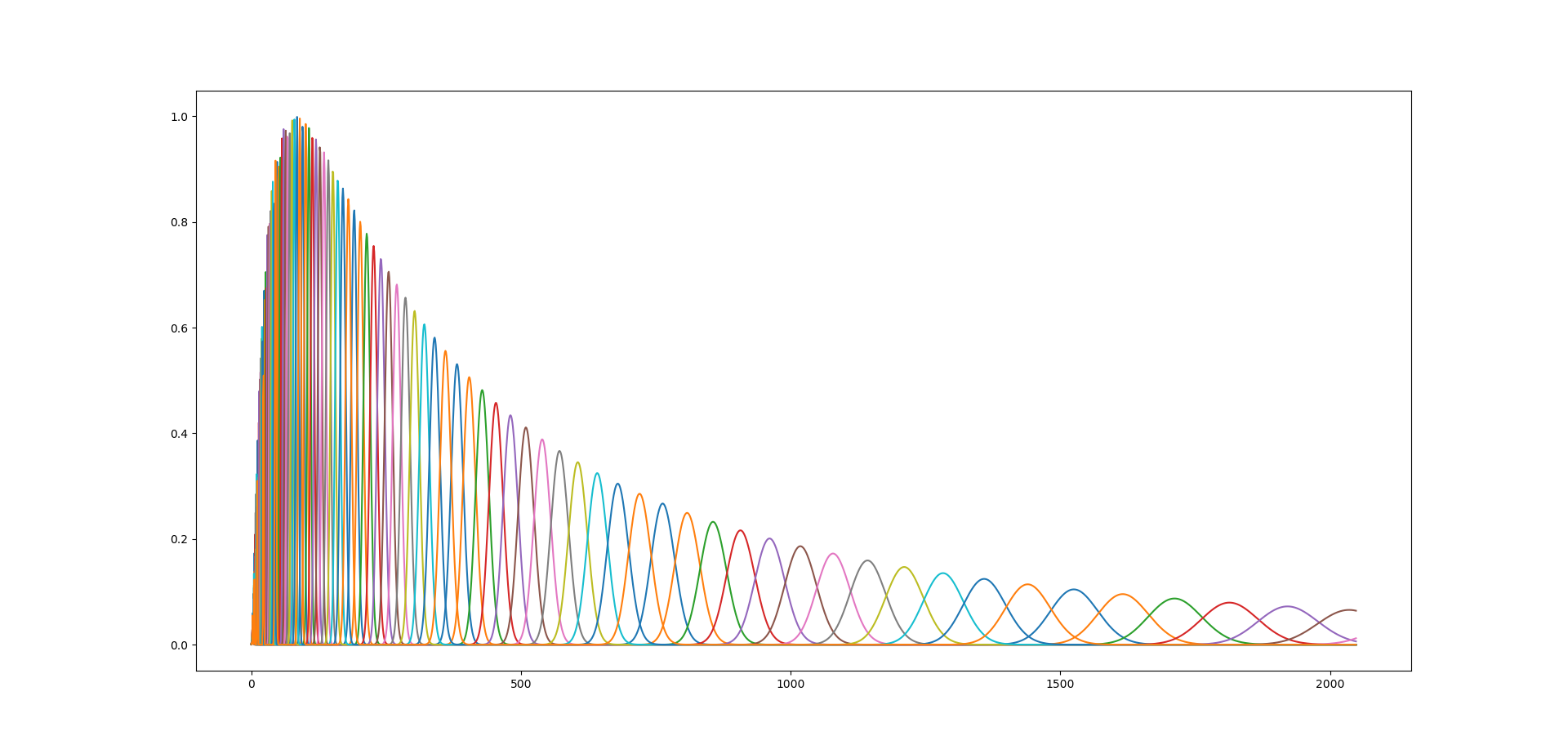

-> The Short-Time Fourier Transform (STFT) was first calculated for each frame.

-> The result was then passed through a chroma filter to obtain the chromagram.

This chromagram was used to calculate features such as the chroma centroid, chroma spread, min/max chroma and chroma flux. In an additional approach, groups of 10 frames were used together to calculate the mean energy and standard deviation of each chroma bin.
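The two steps above (STFT per frame, then a chroma filter) can be sketched in pure Python as follows. This is a minimal illustration under stated assumptions, not the project's actual code: the function names are hypothetical, a naive DFT stands in for an FFT, a Hann window is assumed, and the "chroma filter" is simplified to assigning each spectral bin's energy to its nearest equal-tempered pitch class with A4 = 440 Hz.

```python
import cmath
import math

# Hypothetical helper names; a naive DFT replaces the FFT so only the stdlib is needed.


def frames(signal, n_fft, hop):
    """Split a signal into overlapping frames of n_fft samples, advancing by hop samples."""
    return [signal[i:i + n_fft] for i in range(0, len(signal) - n_fft + 1, hop)]


def magnitude_spectrum(frame):
    """Magnitude of the DFT of one Hann-windowed frame, bins 0..N/2 (naive O(N^2))."""
    n = len(frame)
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / (n - 1)) for t in range(n)]
    x = [s * w for s, w in zip(frame, window)]
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def chroma_from_spectrum(mag, sr, n_fft, f_ref=440.0):
    """Simplified chroma filter: fold spectral energy into 12 pitch classes (0 = C, 9 = A)."""
    chroma = [0.0] * 12
    for k, m in enumerate(mag):
        freq = k * sr / n_fft
        if freq < 20.0:  # skip DC and sub-audio bins, which have no meaningful pitch
            continue
        midi = 69 + 12 * math.log2(freq / f_ref)  # continuous MIDI pitch (A4 = 69)
        chroma[round(midi) % 12] += m * m         # add the bin's energy to its pitch class
    return chroma


def chromagram(signal, sr, n_fft=256, hop=128):
    """One 12-bin chroma vector per STFT frame."""
    return [chroma_from_spectrum(magnitude_spectrum(f), sr, n_fft)
            for f in frames(signal, n_fft, hop)]
```

For a 440 Hz test tone sampled at 8 kHz, every frame of `chromagram(tone, 8000)` should peak in bin 9 (A). A real pipeline would use an FFT and a smoother chroma filter bank; nearest-semitone folding is only the simplest variant.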

Chromagram Basics: Most music is based on the tonality system. Tonality arranges sounds according to pitch relationships into interdependent spatial and temporal structures. The characterization of chords, keys, melody, motifs and even form largely depends on these structures. The pitch helix is a representation of pitch relationships that places tones on the surface of a cylinder. It models the special relationship that exists between octave intervals. Chroma represents the inherent circularity of pitch organization: it describes the angle of pitch rotation as a pitch traverses the helix. Two octave-related pitches share the same angle in the chroma circle, a relation that is not captured by a linear pitch scale (or even the Mel scale). For the analysis of tonal music, we quantize this angle into 12 positions, or pitch classes.
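To make the helix picture concrete, the sketch below maps a frequency to a position on the pitch helix, assuming 12-tone equal temperament tuned to A4 = 440 Hz (the tuning reference is an assumption; the text does not fix one). `helix_position` and `PITCH_CLASSES` are illustrative names. The angle is the chroma, the height is the octave position, and rounding to the nearest semitone quantizes the angle into the 12 pitch classes.

```python
import math

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def helix_position(freq_hz, f_ref=440.0):
    """Locate a pitch on the pitch helix, assuming equal temperament with A4 = f_ref.

    Returns (chroma_angle, height, pitch_class):
      chroma_angle - angle around the cylinder in radians (the circular part of pitch)
      height       - position along the cylinder axis in octaves (the linear part)
      pitch_class  - the angle quantized to one of 12 positions (0 = C ... 11 = B)
    """
    midi = 69 + 12 * math.log2(freq_hz / f_ref)  # continuous semitone number
    chroma_angle = 2 * math.pi * (midi % 12) / 12
    height = midi / 12
    pitch_class = round(midi) % 12
    return chroma_angle, height, pitch_class
```

With this mapping, 220 Hz, 440 Hz and 880 Hz share the same angle and pitch class (A) while their heights differ by exactly one octave, which is the octave equivalence that a linear pitch or Mel scale does not express.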

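Chroma Centroid: It is the weighted mean of the chroma bin indices k = 0, ..., 11, where the weights are the energies C(k) of the chroma bins. By analogy with the spectral centroid, where higher values correspond to brighter textures with more high-frequency content, the chroma centroid measures the shape of the chroma distribution; here, a higher value means that energy is concentrated in higher-numbered pitch classes rather than at higher frequencies.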

$$ \text{Centroid} = \frac{\sum_{k=0}^{11} k\,|C(k)|}{\sum_{k=0}^{11} |C(k)|} $$

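Chroma Spread: It is the energy-weighted variance of the chroma bin indices around the centroid.

$$ \text{Spread} = \frac{\sum_{k=0}^{11} (k - \text{Centroid})^2\,|C(k)|}{\sum_{k=0}^{11} |C(k)|} $$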
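A direct transcription of the centroid and spread formulas, as a sketch with hypothetical function names. Returning 0 for an all-zero (silent) frame is an assumption, since both formulas are undefined there.

```python
def chroma_centroid(c):
    """Energy-weighted mean of the bin indices 0..11 (the Centroid formula)."""
    total = sum(abs(v) for v in c)
    if total == 0:
        return 0.0  # silent frame: undefined, 0 returned by convention (assumption)
    return sum(k * abs(v) for k, v in enumerate(c)) / total


def chroma_spread(c):
    """Energy-weighted variance of the bin indices around the centroid (the Spread formula)."""
    total = sum(abs(v) for v in c)
    if total == 0:
        return 0.0  # same convention as above
    mu = chroma_centroid(c)
    return sum((k - mu) ** 2 * abs(v) for k, v in enumerate(c)) / total
```

As in the formulas, the bins are treated as a linear axis from 0 to 11, so pitch classes 11 (B) and 0 (C) sit at opposite ends even though they are neighbours on the chroma circle.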

Min Chroma: It is the chroma bin with the least energy.

Max Chroma: It is the chroma bin with the most energy.

Chroma Flux: It shows how the chroma distribution varies across frames. It is adapted from the spectral flux, which is defined as the squared difference between the normalized magnitudes of successive spectral distributions corresponding to successive signal frames. For the chromagram, it is computed as the sum of absolute differences between the chroma vectors of successive frames r - 1 and r:

$$ \text{Flux}_r = \sum_{k=0}^{11} \left| C_r(k) - C_{r-1}(k) \right| $$
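The flux formula can be sketched as below (hypothetical names). The optional `normalize` flag, which rescales each chroma vector to unit sum before differencing, is an assumption that mirrors the normalization in the spectral-flux definition; with the default `normalize=False` the function computes exactly the formula above.

```python
def _unit_sum(c):
    """Rescale a chroma vector so its bins sum to 1 (left unchanged if all zero)."""
    s = sum(c)
    return [v / s for v in c] if s > 0 else list(c)


def chroma_flux(prev, curr, normalize=False):
    """Flux_r = sum_k |C_r(k) - C_{r-1}(k)| between frames r-1 (prev) and r (curr)."""
    if normalize:  # optional variant, not part of the formula itself
        prev, curr = _unit_sum(prev), _unit_sum(curr)
    return sum(abs(b - a) for a, b in zip(prev, curr))


def flux_sequence(chromagram):
    """Flux for every frame r >= 1 of a chromagram (list of 12-bin vectors)."""
    return [chroma_flux(chromagram[r - 1], chromagram[r]) for r in range(1, len(chromagram))]
```

Without normalization, a frame that is simply louder than its predecessor also produces flux; normalizing isolates changes in the shape of the chroma distribution.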

In our initial attempt, the feature vectors on which the classifier was trained contained the following features:

1. Chroma Centroid

2. Chroma Spread

3. Min chroma

4. Max chroma

5. Chroma flux

These 5 features were augmented with the 12 chroma coefficients obtained directly from the chromagram, giving a 17-dimensional feature vector.
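A sketch of how the 17-dimensional vector might be assembled, assuming the centroid, spread and flux of a frame have already been computed with the formulas above. The function name and the feature order (following the list above) are assumptions, and Min/Max chroma are read as the indices of the lowest- and highest-energy bins; using the energies themselves would be an equally plausible reading of the text.

```python
def feature_vector_17(chroma, centroid, spread, flux):
    """Hypothetical assembly: [centroid, spread, min, max, flux] + 12 chroma coefficients."""
    if len(chroma) != 12:
        raise ValueError("expected 12 chroma coefficients")
    min_chroma = min(range(12), key=lambda k: chroma[k])  # index of least-energy bin
    max_chroma = max(range(12), key=lambda k: chroma[k])  # index of most-energy bin
    summary = [centroid, spread, float(min_chroma), float(max_chroma), flux]
    return summary + [float(v) for v in chroma]
```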

Not satisfied with the classification accuracy, we widened our window of analysis by considering 10 frames at a time with a hop length of 5 frames. For each window, we computed the mean and the variance of each chroma coefficient over the 10 frames, thereby capturing the chroma distribution. This gave a 24-dimensional feature vector (12 means and 12 variances), which improved the results.
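The windowed aggregation can be sketched as follows, assuming a chromagram given as a list of 12-element frames. The name `window_features`, the use of population variance (dividing by 10) and the layout [12 means, 12 variances] are assumptions; frames left over after the last full window are simply dropped.

```python
def window_features(chromagram, win=10, hop=5):
    """Mean and variance of each chroma bin over windows of `win` frames, hopped by `hop`.

    Returns one 24-D vector per window: [mean_0 .. mean_11, var_0 .. var_11].
    """
    out = []
    for start in range(0, len(chromagram) - win + 1, hop):
        block = chromagram[start:start + win]
        means = [sum(fr[k] for fr in block) / win for k in range(12)]
        # population variance of each bin within the window
        variances = [sum((fr[k] - means[k]) ** 2 for fr in block) / win for k in range(12)]
        out.append(means + variances)
    return out
```

With 20 frames, windows start at frames 0, 5 and 10, so three 24-D vectors are produced; consecutive windows overlap by half, which smooths the features over time.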

| Classes | Features | Frame Size (No. of Frames) | Classifier | Accuracy |
|---|---|---|---|---|
| Rock, Classical, Jazz, Metal | Cen, Var, Max, Min, Flux | 0.046 s (2048) | SVM | 38.8% |
| Rock, Classical, Jazz, Metal | 12 Chroma Coefficients | 0.046 s (2048) | SVM | 42.5% |
| Rock, Classical, Jazz, Metal | Cen, Var, Max, Min, Flux, 12 Chroma Coefficients | 0.046 s (2048) | SVM | 43.5% |
| Rock, Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | SVM | 46.5% |
| Rock, Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | SVM | 54.5% |
| Rock, Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | SVM, overfit (gamma = 5) | 55.4% |
| Rock, Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | MLP (12, 8, 6) | 57% |
| Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | MLP (8, 8) | 68.5% |
| Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | SVM | 68.6% |
| Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | MLP (6, 3, 3) | 68.8% |
| Classical, Jazz, Metal | Mean and Variance of 12 Chroma Coefficients | 0.232 s (4096) | MLP (12, 8, 6) | 70.1% |

1. Developing an automatic genre based disco lights system.

2. Automatic Equaliser.

3. Emotion-mapped music player.

[1] George Tzanetakis, Georg Essl and Perry Cook, Automatic Musical Genre Classification of Audio Signals.

[2] Dan-Ning Jiang, Lie Lu, Hong-Jiang Zhang, Jian-Hua Tao and Lian-Hong Cui, Music type classification by Spectral Contrast feature.

[3] Hariharan Subramanian, Audio Signal Classification.

[4] Michael Haggblade, Yang Hong and Kenny Kao, Music Genre Classification.

[5] Meinard Müller and Sebastian Ewert, Chroma Toolbox: MATLAB Implementations for extracting variants of Chroma-based audio features.

[6] Martin Vetterli, A Theory of Multirate Filter Banks.

[7] Carol L. Krumhansl and Lola L. Cuddy, A Theory of Tonal Hierarchies in Music.

[8] Juan Pablo Bello, Chroma and tonality, Music Information Retrieval.

[9] Fredric Patin, Beat Detection Algorithms.

[10] Justin Jonathan Salamon, Chroma-based Predominant Melody and Bass Line Extraction from Music Audio Signals.